5 – Digitalisation of exploration

Norway’s oil and gas accumulations are becoming increasingly hard to find.

Technological progress and digitalisation have provided better data and tools which contribute to increased geological understanding and make it possible to identify new exploration concepts. Digitalisation also provides further opportunities to reduce exploration costs and enhance the efficiency of work processes. That can help to reduce exploration risk and increase discoveries.

The exploration business has a long tradition of handling large volumes of data through acquisition and processing of geophysical and well data. Oil exploration is an industry which has pushed the boundaries of digital technology. Seismic surveys generate enormous quantities of data, and their processing and analysis use some of the biggest supercomputers and computer clusters available at any given time.

For many years, advanced modelling and simulation, 3D visualisation and automated geological interpretation have been part of the toolbox for specialists in the exploration business.

Oil and gas exploration moves boundaries for digital technology

Increasing data quantities

Data quantities are growing fast

The NPD is responsible for maintaining knowledge about the petroleum potential of the NCS, serving as a national continental shelf library and disseminating facts and knowledge. That includes making information from all phases of the industry easily accessible, and communicating facts and specialist knowledge to government, industry and society at large (figure 5.1).

The NPD’s many years of acquiring and making data publicly available – in part through its Factpages – have given the NCS a competitive advantage in relation to many other petroleum provinces, where securing access to information is more demanding.

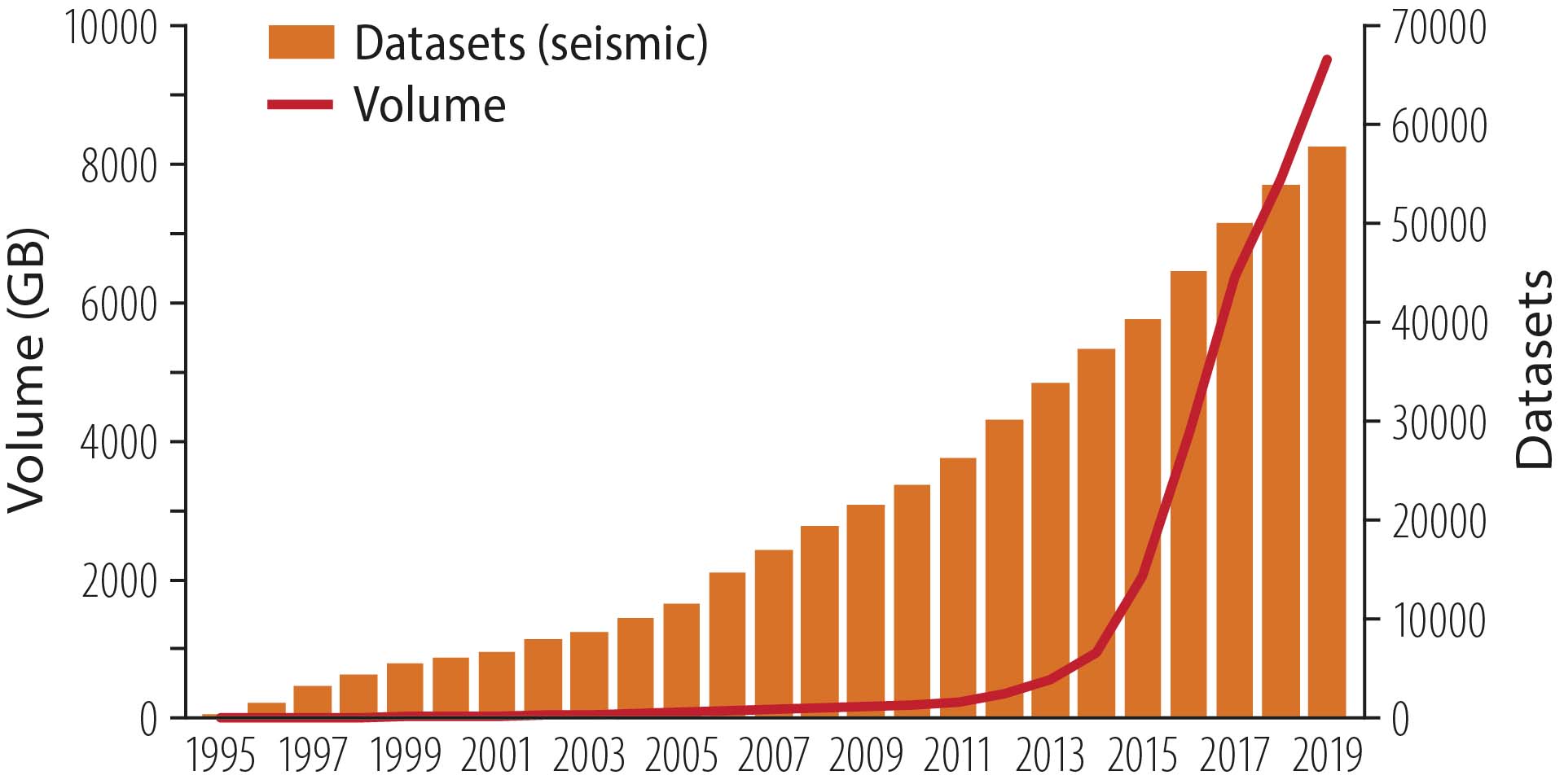

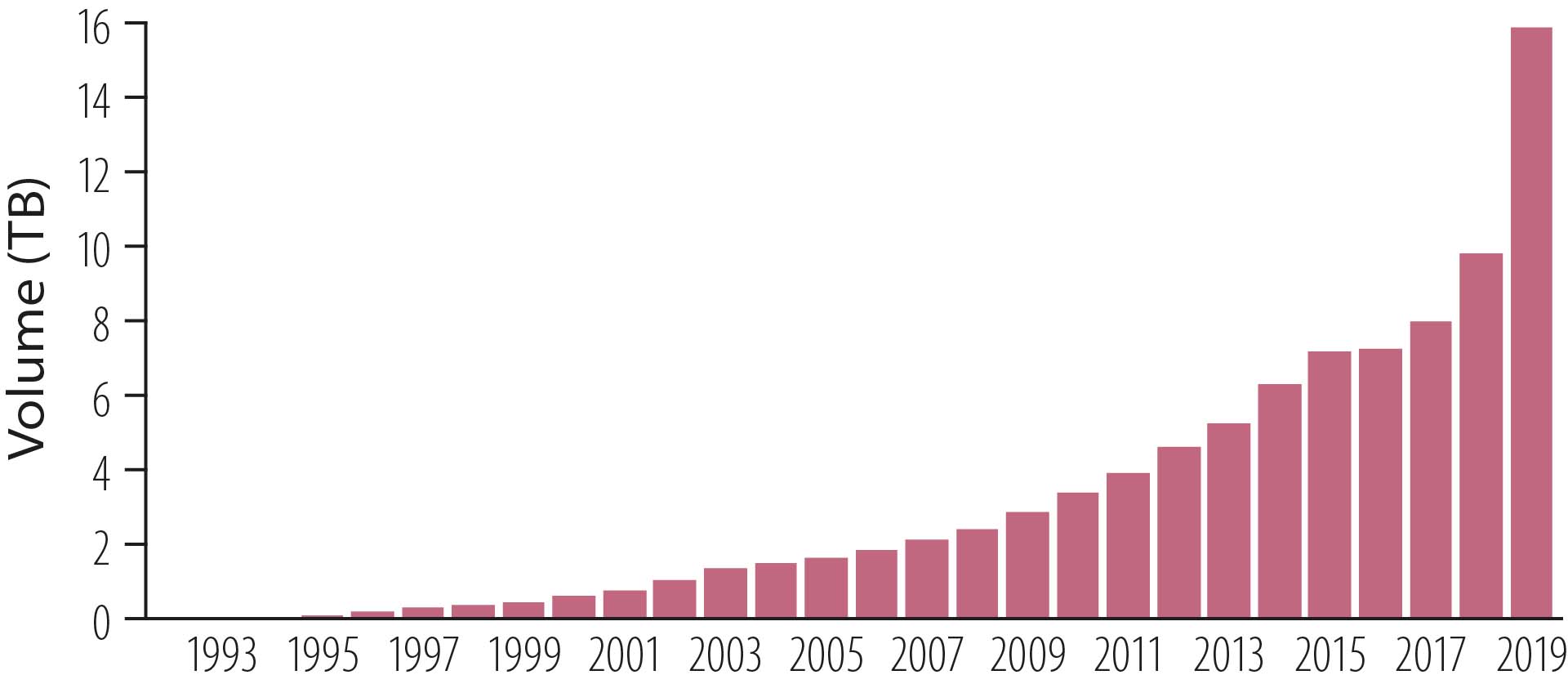

The Diskos national data repository for seismic and well information on the NCS (fact box 5.1) held some 10 000 terabytes (10 petabytes) of data at 31 December 2019 and is expanding rapidly (figures 5.2 and 5.3). These large quantities of data provide a unique basis for analysing opportunities which can help in making new discoveries.

Figure 5.1 Communicating facts

Figure 5.2 Development of seismic data volume in Diskos, 1994-2019

Figure 5.3 Development of well data volume in Diskos, 1994-2019

Making data accessible

Big Data analyses based on machine learning (ML) and artificial intelligence (AI) may provide new information and insights. More and better data, tools and methods can increase geological understanding of the sub-surface and identify new exploration concepts. This is conditional on easy access to digital data.

More than 50 years of data acquisition and technology development on the NCS have yielded a number of different software tools for data processing and a multitude of partly incompatible file formats, databases and storage systems. The result is that information is difficult to retrieve from one system for use in another, while collating data acquired by different disciplines for cross-disciplinary analysis is challenging.

Information can often also be poorly structured and lack metadata, making it hard to detect relationships between objects and assess data quality. Both storage formats and quality problems can hamper efficient Big Data analyses. Easy accessibility to information is crucial for exploiting the value potential offered by AI and Big Data analysis.

Data must therefore be conditioned – in other words, converted to a format which everyone can use and machines can read. The commitment required for this has been underestimated, and must be given priority if the value potential is to be realised.
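What conditioning can involve is sketched below in Python. This is an illustration only: the legacy line format, the well names, the values and the file name `legacy_export.txt` are all invented, and real conditioning work on the NCS involves far more varied formats. The sketch reads loosely formatted text lines, normalises units and attaches metadata, so that the result is a structured record which machines can read.

```python
import json

# Hypothetical legacy export: semicolon-separated text with inconsistent
# units and no metadata. Well names and values are invented.
LEGACY_LINES = [
    "WELL-A; depth=2450 m; porosity=21 %",
    "WELL-B; depth=8038 ft; porosity=0.18",
]

FT_TO_M = 0.3048


def condition(line: str, source: str) -> dict:
    """Convert one legacy line to a record with explicit units and metadata."""
    name, *fields = [part.strip() for part in line.split(";")]
    # Keep the raw line and its origin so data quality can be traced later.
    record = {"well": name, "metadata": {"source": source, "raw": line}}
    for field in fields:
        key, value = field.split("=")
        tokens = value.split()
        number = float(tokens[0])
        unit = tokens[1] if len(tokens) > 1 else ""
        if key == "depth":
            # Store depth in metres regardless of the original unit.
            metres = number * FT_TO_M if unit == "ft" else number
            record["depth_m"] = round(metres, 1)
        elif key == "porosity":
            # Store porosity as a fraction between 0 and 1.
            fraction = number / 100 if unit == "%" else number
            record["porosity_fraction"] = round(fraction, 4)
    return record


records = [condition(line, source="legacy_export.txt") for line in LEGACY_LINES]
print(json.dumps(records, indent=2))
```

Both invented wells end up with depth in metres and porosity as a fraction, even though the source lines used different units, and each record carries the raw line as metadata.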

Data must be made available to all and conditioned for machine reading

Several projects have been initiated to improve the quality of data and make them machine-readable. One example is a project launched by licensees on the NCS, through the Norwegian Oil and Gas Association, to digitalise drill cuttings information from around 1 500 wells and make this available in Diskos.

Several projects have been initiated by the repository to make data machine-readable (fact box 5.2), and Diskos is continuously seeking to develop the database further by adopting new technology and improving ways of working. A priority is to determine how ML and AI can help to improve data quality, particularly for old information and large volumes of unstructured datasets.

Work is also under way at the NPD to make other data available in digital format for analysis. One project aims to digitalise palynological (microfossil) slides submitted to the NPD (figure 5.5 and fact box 5.3).

Fact box 5.2 - Machine-readable core data

Fact box 5.3 - AVATARA-p – advanced augmented analysis robot for palynology

The NPD has also participated in a project with Britain’s Oil and Gas Authority (OGA) and the Oil and Gas Technology Centre (OGTC) in Aberdeen to study analysis methods which can quickly and accurately assess structured and unstructured well data. This aims to use the information to identify and classify intervals which might indicate the presence of overlooked petroleum accumulations (fact box 5.4). Part of the work involves organising and cleaning data so that they can be used in analyses.

Intensive efforts are being devoted by a number of industry players to establishing data platforms which can liberate information from its original formats and storage systems, and make it more available to applications. Key elements here are developing robust and standardised data models and application programming interfaces (APIs) for data sharing.

Several of the big companies have joined forces to establish the cloud-based Open Subsurface Data Universe (OSDU) platform, which has quickly attracted wide support. The aim is to make all global exploration, production and well data available in the same format on a data platform.

Benefits will include even better opportunities for Big Data analyses and improved base data for ML and AI. The group has attracted 133 oil, service and technology companies as members, including BP, Chevron, ConocoPhillips, Equinor, ExxonMobil, Hess, Marathon Oil, Noble Energy, Pandion Energy, Shell, Total, Woodside and Schlumberger.

Fact box 5.4 - OGTC/OGA/NPD project

Big Data analysis

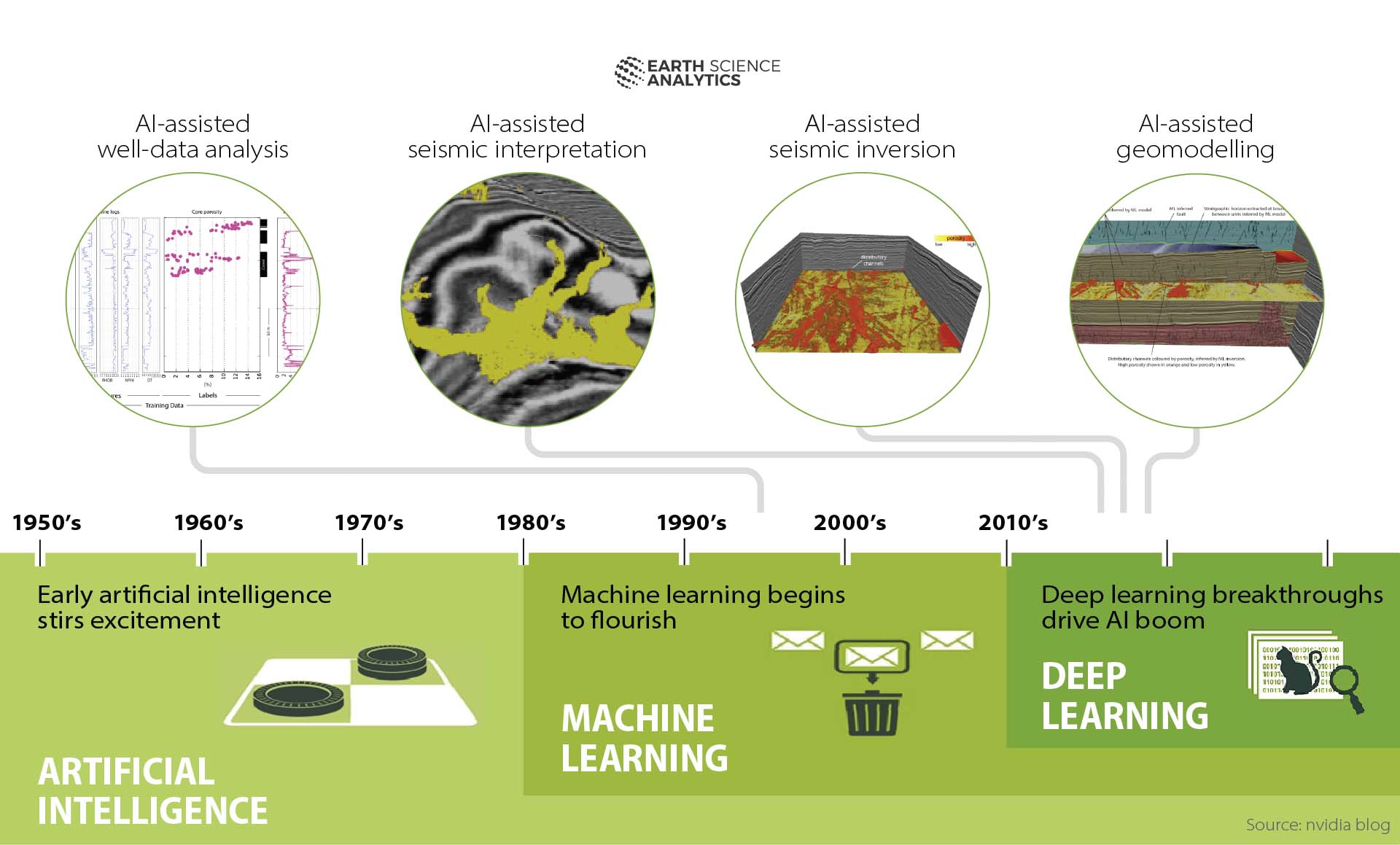

Big Data analysis has emerged to meet the need for identifying trends, patterns and significance in the huge quantities of information being generated. Such analysis is often not tied to a single dataset but works across several. Development over time is presented in figure 5.9 [17]. Many of the techniques or methods involved, such as data mining, ML and deep learning, are variants of AI.

Generally speaking, data mining refers to the process of extracting information or knowledge from raw data. ML is a subfield of the broad AI field, and refers to the use of specific algorithms to identify patterns in raw data and capture the relationships between variables in a model. Such models (or algorithms) can then be used to draw conclusions about new datasets or to guide decisions.

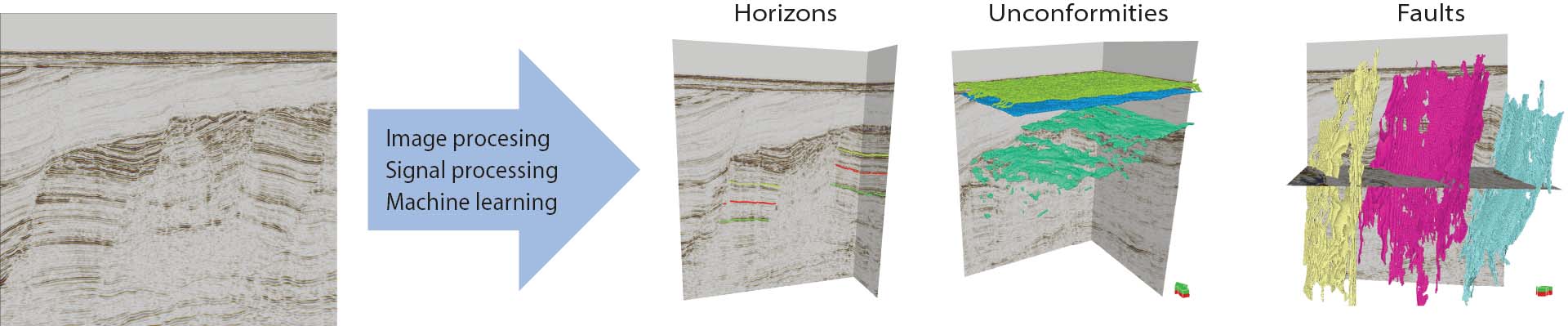

ML is a well-known term in exploration. Auto-interpretation of seismic data is one example, which has existed for many years and is illustrated in figure 5.10. ML models can also be constructed from well data and used to predict reservoir properties in the sub-surface. This methodology can also help to improve incomplete datasets by predicting missing information.
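The idea of predicting missing information from well data can be illustrated with a deliberately small Python sketch. It is not a method described in this report: the samples are synthetic and invented, and an ordinary least-squares straight line stands in for the far richer ML models used in practice. The model is fitted to the samples where both measurements exist, then used to fill the gaps.

```python
from statistics import mean

# Synthetic, invented samples for one hypothetical well:
# (bulk density in g/cm3, porosity as a fraction, or None where missing).
samples = [
    (2.20, 0.27),
    (2.30, 0.21),
    (2.40, 0.15),
    (2.50, 0.09),
    (2.35, None),
    (2.45, None),
]


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Train only on samples where the target value is known.
known = [(x, y) for x, y in samples if y is not None]
slope, intercept = fit_line([x for x, _ in known], [y for _, y in known])

# Keep measured values; predict only where the value is missing.
filled = [
    (x, y if y is not None else slope * x + intercept)
    for x, y in samples
]

for density, porosity in filled:
    print(f"{density:.2f} g/cm3 -> porosity {porosity:.3f}")
```

Measured values are left untouched, so the predictions supplement the dataset rather than overwrite it.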

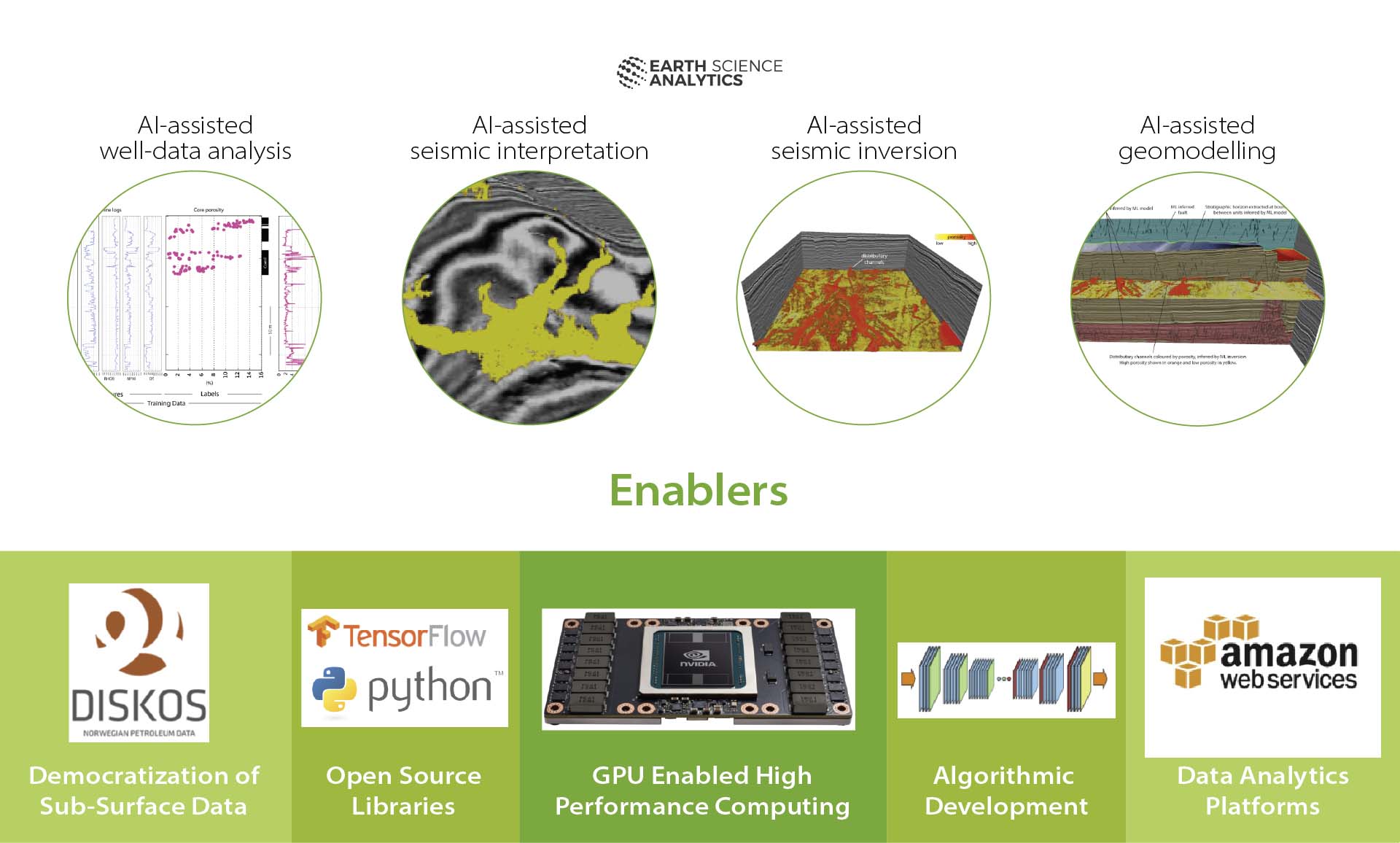

Figure 5.9 AI and data analysis. Source: modified from Earth Science Analytics and Nvidia blog (2018).

Figure 5.10 Automated seismic interpretation. Source: Lundin Energy.

Substantial value potential

Realising the value potential

So far, the industry has probably only scratched the surface of what could be achieved through digitalisation in the exploration sector to reduce uncertainty, enhance efficiency and find more oil and gas.

Rapid advances in recent years mean that an ever-growing number of players have become aware of the opportunities, and broad agreement has emerged in the industry that digitalisation offers a big potential. To succeed, however, it is not enough for the players to invest in their own projects.

They must also be able to collaborate with both partners and competitors, be willing to share data, knowledge and technology, and assess their own business model and role in the market. Data utilisation could also present challenges – in an ML context, for example – because of the Copyright Act, even when the information is no longer confidential.

It is important that the industry and the government can now find solutions which help to make released data as open as possible. Failure to accomplish this could mean the value potential fails to be realised or takes a long time to come to fruition (fact box 5.5).

"Companies must collaborate

and be willing to share data,

knowledge and technology"

The government also plays an important role in helping to customise and share data and knowledge, so that value creation for society is maximised (fact box 5.6).

In the wake of the KonKraft report (fact box 5.5), greater attention has been paid to sharing various types of data and the effects of this. The government has assessed how far the status reports (fact box 5.7) should be made public.

Fact box 5.5 - KonKraft (competitiveness of the NCS)

Fact box 5.6 - Sharing data creates greater value for society than for a single company

Publishing status reports could contribute to more cost-effective exploration. This would improve data access for companies thinking of applying for licences in previously awarded areas. It would also ensure a minimum of experience transfer from earlier licensee groups to their successors, and communicate details of more recent data and studies which new licensees might consider acquiring.

As a growing share of the NCS becomes more mature, new production licences will increasingly include acreage which has already been licensed at least once (figure 2.13).

In some cases, companies are awarded acreage which has been evaluated in detail by previous licensees. On certain occasions, it could make sense for new licensees to re-evaluate the area using new approaches. In other cases, such a reassessment may prove to be a duplication of earlier work which fails to provide new knowledge.

Making status reports public can contribute to more cost-effective exploration in that new licensees and others benefit from work done and experience gained in earlier licences covering the same area. That will help to make exploration more efficient both through increased competition and a variety of ideas, and through reduced exploration risk. This confers a socioeconomic benefit. The MPE has published proposals for amending the regulations, including the release of status reports, with a consultation deadline of 1 November 2020.

Figure 5.11 Factors for realising the value potential related to digitalisation in the exploration phase. Source: modified from Earth Science Analytics (2018)

Democratisation of sub-surface data

Most of the prerequisites for realising such socioeconomic gains are in place on the NCS. The most important factors are illustrated in figure 5.11 [17]. General and simple access to sub-surface data through the NPD, Diskos and other sources allows specialists to experiment with new analysis methods and techniques, and to build data science into their model structure.

This presupposes that the industry agrees on standards which permit interoperability (fact box 5.8) and which ensure that functions embedded in company-specific systems can interconnect. In other words, the flow of data between players and applications is simplified, interpretation of shared information is supported and the benefit of datasets assembled across companies and partnerships is made available.

Interoperability requirements ensure that players in the industry avoid depending on a single platform supplier and thereby being locked into that supplier’s technology choices.
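One way to picture interoperability is as a shared data model plus a common interface which every system implements. The Python sketch below is an assumption made for illustration, not an industry standard: `WellRecord`, `WellSource`, the two vendor classes and all values are invented. Each adapter translates its own storage layout into the shared model, so an analysis can run across both systems without knowing how either one stores its data.

```python
from dataclasses import dataclass
from typing import Iterable, Protocol

FT_TO_M = 0.3048


@dataclass(frozen=True)
class WellRecord:
    """Hypothetical shared data model agreed between players."""
    well: str
    depth_m: float


class WellSource(Protocol):
    """Common interface that every company-specific system exposes."""

    def wells(self) -> Iterable[WellRecord]: ...


class VendorASource:
    """Adapter for an invented system storing dict rows with depth in metres."""

    def __init__(self, rows):
        self._rows = rows

    def wells(self):
        return [WellRecord(r["name"], float(r["td_m"])) for r in self._rows]


class VendorBSource:
    """Adapter for an invented system storing (name, depth in feet) tuples."""

    def __init__(self, rows):
        self._rows = rows

    def wells(self):
        return [WellRecord(n, round(ft * FT_TO_M, 1)) for n, ft in self._rows]


def deepest(sources: Iterable[WellSource]) -> WellRecord:
    """Analysis written once against the shared interface."""
    return max((w for s in sources for w in s.wells()), key=lambda w: w.depth_m)


sources = [
    VendorASource([{"name": "WELL-A", "td_m": 2450}]),
    VendorBSource([("WELL-B", 10000)]),
]
print(deepest(sources))
```

Swapping one supplier for another then means writing a new adapter rather than rewriting the analysis, which is the lock-in that interoperability requirements are meant to avoid.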

Fact box 5.8 - Interoperability

Open-source library

It has also become more common to release source code, and both general and geoscience-specific open-source libraries have emerged. Access to these makes it considerably simpler to use ML in exploration-related geoscientific analyses.

Algorithm developments

The large size of modern datasets has sparked an explosion in new methods and techniques for information retrieval. As a result, the industry has access to a growing “new” supplier sector through a competent ecosystem of developers and companies producing new algorithms, based in part on the increasing quantity of sub-surface data.

The existing supplier industry has also adopted innovative digital tools and working methods. And the big cloud companies offer advanced tools and services for analysing and modelling large datasets.

Data analysis platforms

A great many different data platforms contain important information about the sub-surface on the NCS. Compiling these into a single large open platform would probably make data access more efficient. Such a compilation can be used with advanced ML and algorithms to combine countless databases into a form of hub.

Computing power

The new methods for Big Data analysis are made possible in part by rapid advances in computer processing power and speed, and by combining devices to permit high-performance computing (HPC). This is increasingly offered by the cloud companies, partly in order to optimise data analysis and hopefully shorten the time between exploration and first oil or gas production.

Big computer resources will be required if very substantial quantities of data are to be processed, or if they require large parallel calculations. So far, the most cost-effective approach has been to establish this in a supercomputer centre [19].

Supercomputers require heavy investment. According to the industry, however, they could reduce the time from awarding a production licence to making a discovery by many months and cut costs substantially through fewer dry wells. Since establishing supercomputers gives economies of scale, it has largely been the big petroleum industry players who have established data centres with such machines. These normally run at full capacity around the clock.

Eni, Total and Petrobras have all recently upgraded their supercomputers and greatly increased available processing power. In addition to the big oil companies, the seismic survey sector makes substantial use of its own supercomputers. Small oil companies which only need heavy computing capacity at intervals rent this either in the cloud or from specialised players.